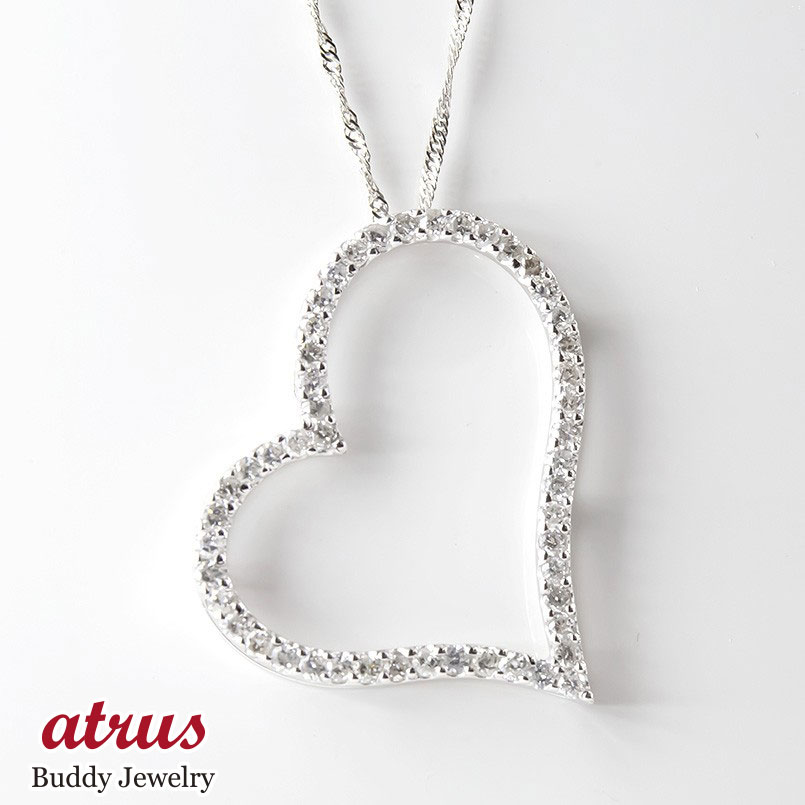

14K Polished Diamond-Cut Puffed Heart Pendant

(Tax included) Shipping included

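Item Description

Product Information

Thank you very much for viewing this listing. As a rule, items are ordered from an overseas warehouse, so delivery usually takes about 2 to 14 business days. In rare cases, packages shipped from an overseas warehouse may be opened by customs, so please keep this in mind before purchasing.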

Price: ¥25,795

Amazon.co.jp: 14K Yellow Gold Diamond-Cut Puffed Heart

14K Polished Puffed Heart Pendant, Direct Sales Venue, Watches

14K and Rhodium Diamond-Cut Heart Pendant - Women's

14K Rose & White Gold Diamond-Cut Heart Pendant

Amazon.co.jp: 14K Satin & Polished Puffed Heart Pendant : Home

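Diamond Puffy Heart Pendant Top – シルバームーン彫金工房

Amazon | 14K Yellow Gold Diamond-Cut Puffed Heart Pendant

Pendant Top, Heart Shape M (with Facet Cut) – H.P.FRANCE Official

Dancing Stone Diamond Necklace, Solitaire 0.3ct, with Grading Report

K18 WG Aurora Tennyo 9.0mm Pendant Necklace with Dancing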

Heart Series - Synthetic Diamond Specialty Store Angel peach

Alexis Bittar Women's Earrings Accessories

K18 Pendant Top / Heart Cut 10mm | Moldavite Online Specialty Store

20091163 ミヌー Necklace, White Gold, Heart Shape

Dancing Stone Necklace, Diamond Solitaire, Platinum 0.2

Discount Purchase: Open Heart PT900 Diamond 0.5 Pendant Top

Light-Color Orange Moonstone Bracelet, AAA Grade (3A

【Rakuten Ichiba】Pt Heart Platinum Pendant Top (14mm) (Pt900

Pt950 SUWA Diamond 0.75ct Marquise-Cut Pendant Top

crssfor Official】【With Identification Report】Necklace, Heart & Cupid

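Item Information

Mercari's Commitment to Safe Transactions

Your payment is held by the Mercari office and transferred to the seller after the transaction has been rated.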

Seller

Fast Shipping

This seller ships within 24 hours on average.